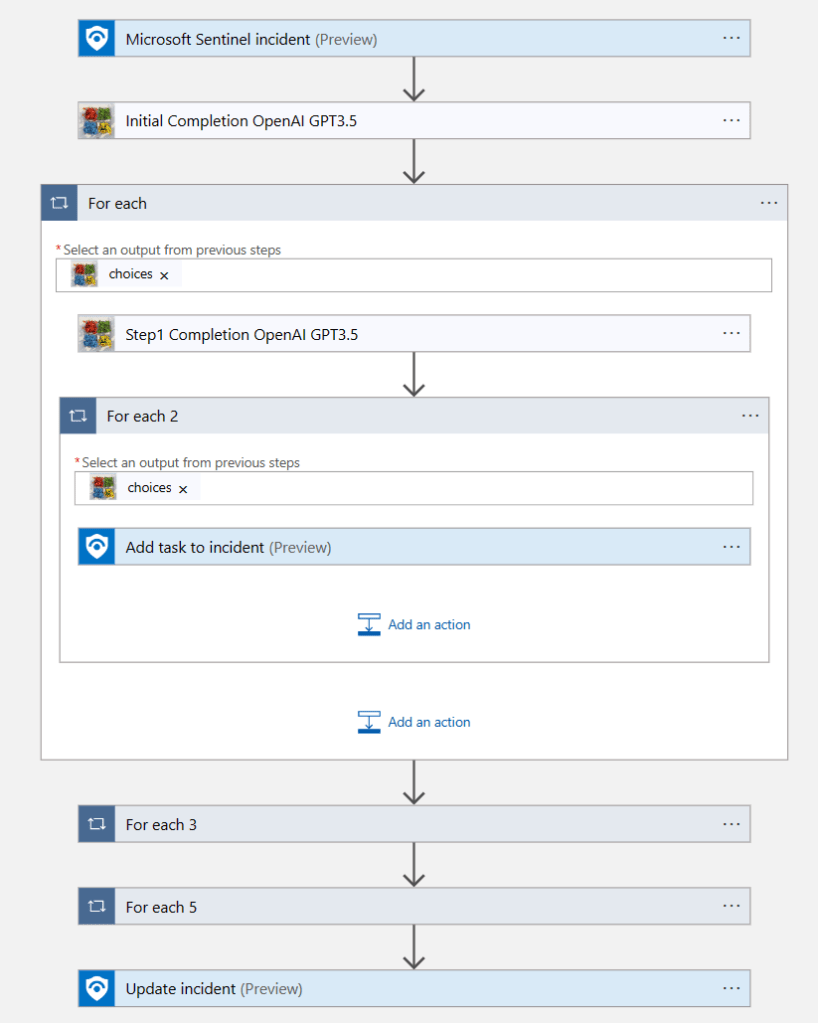

TL;DR – Managing lists globally and locally, i.e., on a customer-by-customer basis, using watchlists and externaldata.

A recurring requirement from MSSPs is a global watchlist that all their customers can reference within specific analytic rules, with contents that only the MSSP can modify. One scenario is a list of network IPs that the MSSP wants to be alerted on whenever they show up in any customer workspace. An extension to that scenario is an equivalent local watchlist that customers can modify, so they can add their own untrusted IPs or locations. Going further, it is sometimes useful to combine both into one incident, so the alerts can all be investigated together. This blog post covers my recommendations to partners for achieving this scenario.

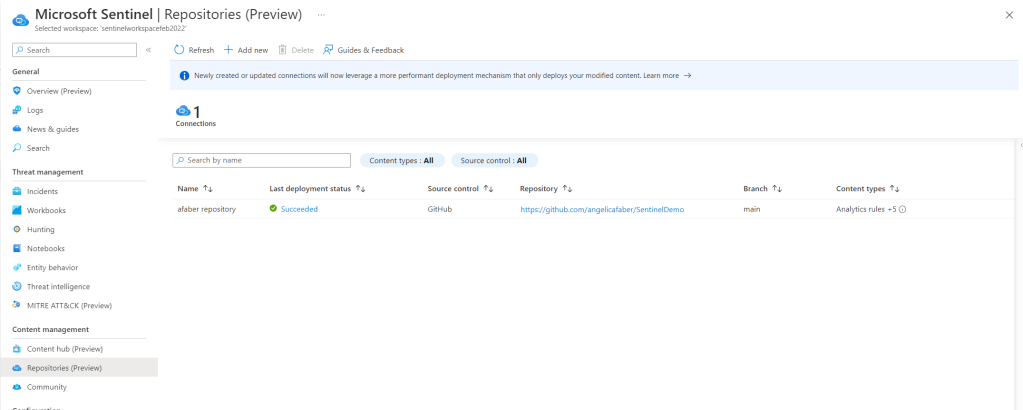

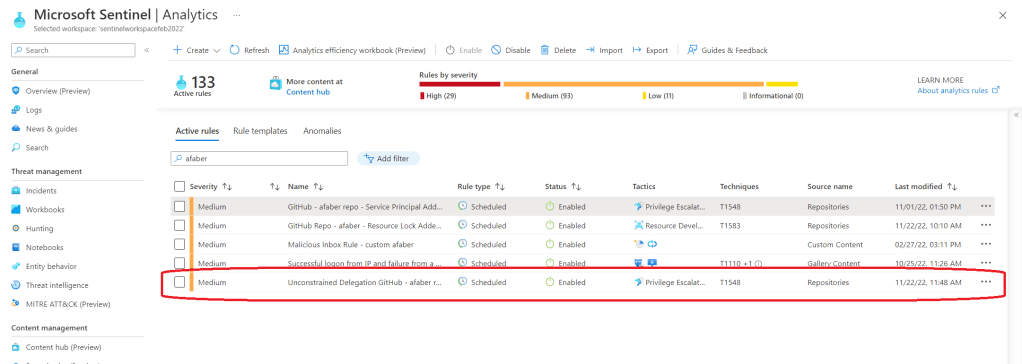

At this time there are some restrictions for watchlists, such as the fact that they can ‘only be referenced from within the same workspace’. Also, you probably know that I am a huge fan of both Repositories and Workspace Manager, but currently neither supports pushing watchlists to workspaces. However, even if they did, I think this scenario would still apply, because MSSPs likely want to completely block any modifications to that global watchlist.

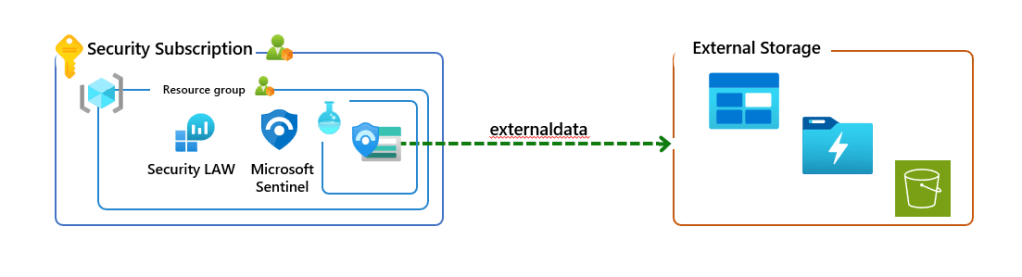

Therefore, the recommendation I provide to partners is to configure a global lookup table that is not a Sentinel watchlist and instead uses the externaldata operator. A regular Sentinel watchlist then serves as the local lookup table, and the two can be combined within a single analytic rule, if required.

The global watchlist (?)

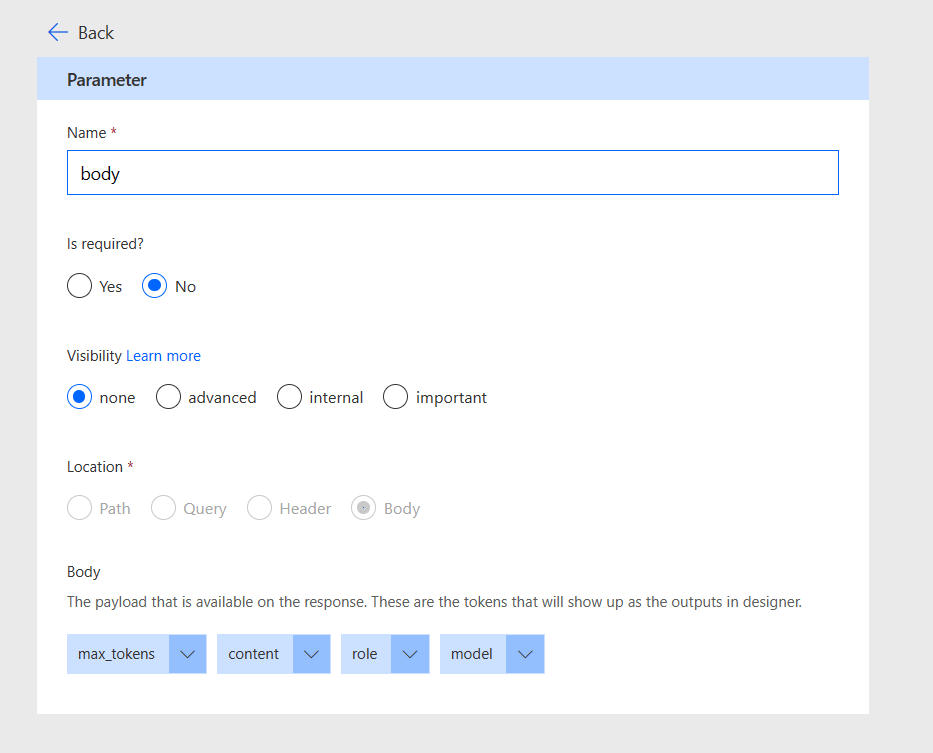

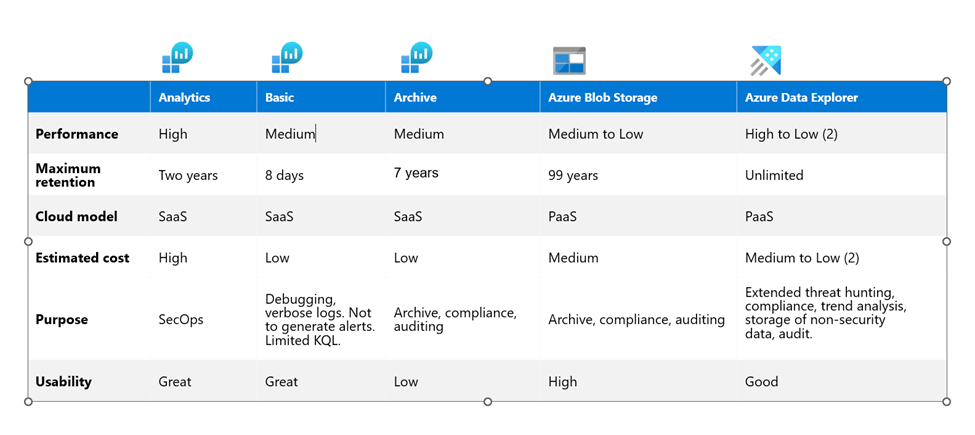

This is not a Sentinel watchlist. This is where the externaldata operator is used to point to an external storage file that the MSSP can configure so that only their team can modify it. Keep in mind that the externaldata operator only supports a specific list of storage services.

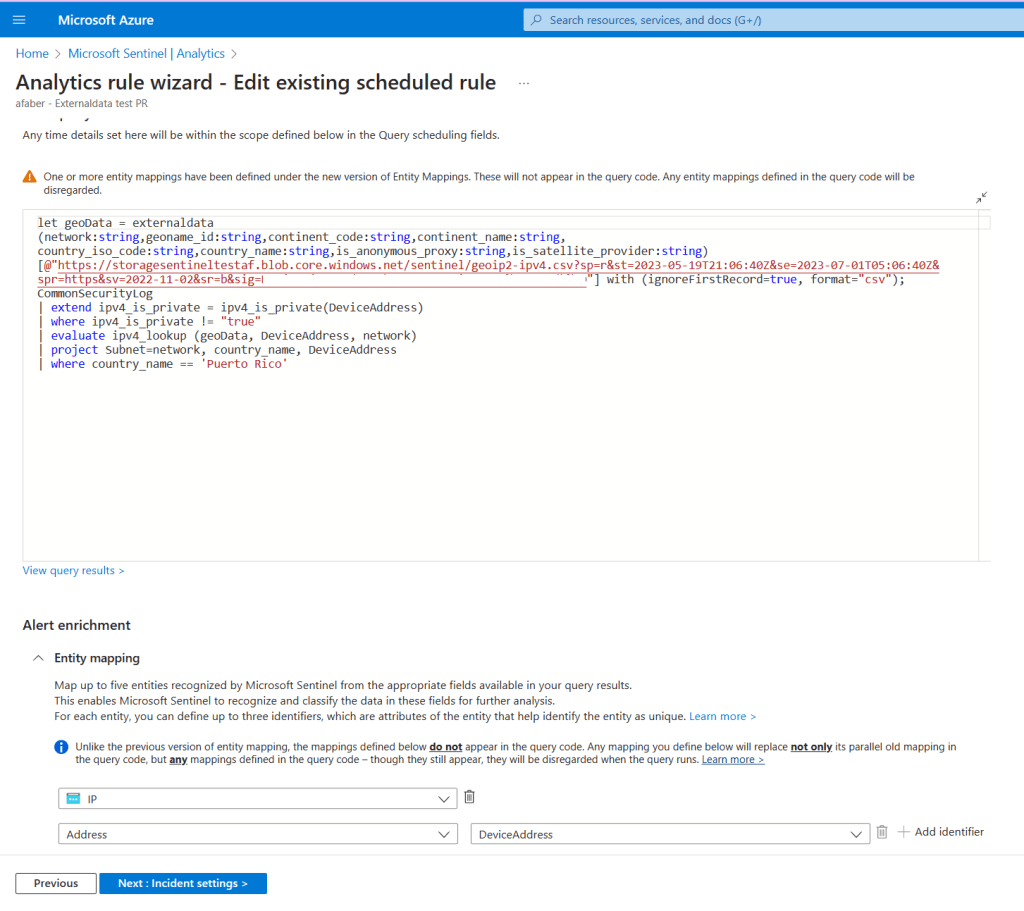

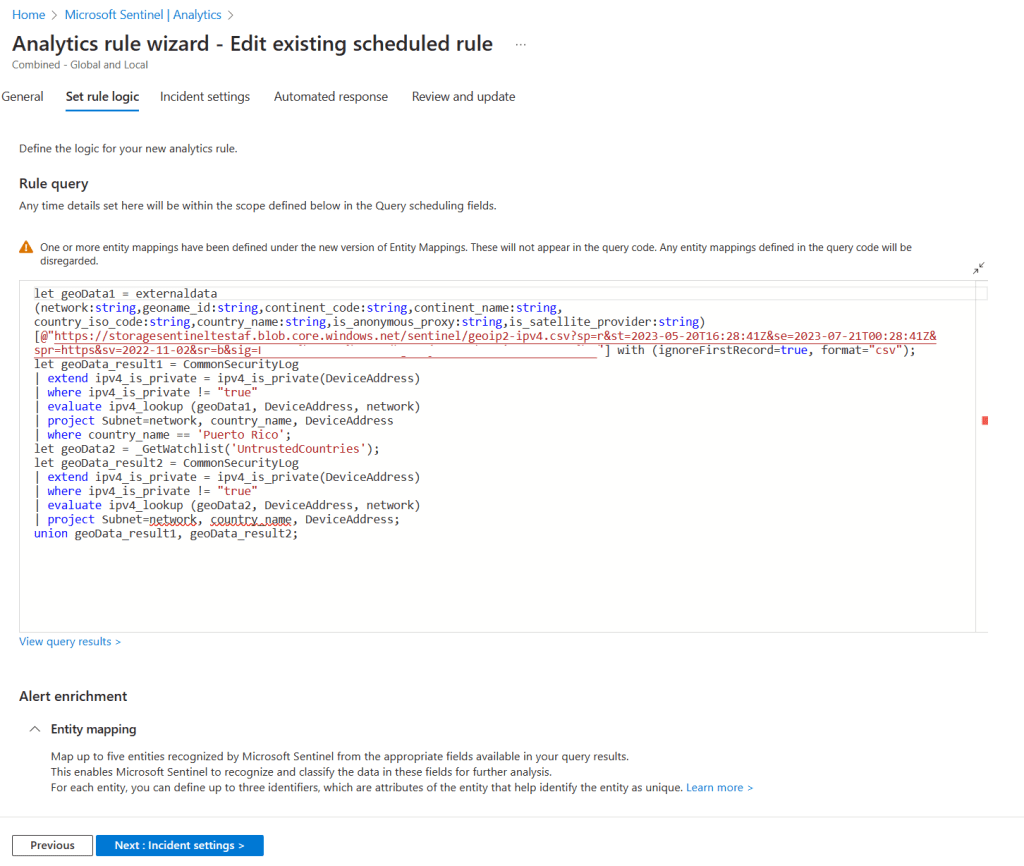

The analytic rule would look something like this:

In the rule above, I am using the externaldata operator pointing to an Azure Blob Storage file, which is one of the options supported. Specifically, I am using a SAS token and URL with a predetermined expiration date. Also, in the example above, I am generating alerts for a specific country, Puerto Rico, aka Isla del Encanto😊, because in my test scenario I don’t expect any connections from that location. This is obviously just an example.
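For reference in text form, here is a minimal sketch of what such a query could look like. It is not the exact rule from my test environment: the storage account, container, file name, and SAS token are placeholders, and the use of SigninLogs and its Location column is an assumption made for illustration. The file is assumed to be a CSV with a header row and one value per line, in the same format that the Location column uses.

```kusto
// Global lookup table: read from a blob that only the MSSP can modify.
// The URL and SAS token below are placeholders, not real values.
let GlobalUntrustedCountries = externaldata(Country: string)
[
    h@"https://<storage-account>.blob.core.windows.net/<container>/<file>.csv?<sas-token>"
]
with (format="csv", ignoreFirstRecord=true);
// Assumed log source: SigninLogs. Swap in whichever table holds the
// connections you want to check.
SigninLogs
| where TimeGenerated > ago(1h)
| where Location in (GlobalUntrustedCountries)
| project TimeGenerated, UserPrincipalName, IPAddress, Location
```

The `h` prefix on the string literal asks Kusto to obfuscate the connection string in its own traces, which is worth doing whenever a SAS token is embedded in a query. Keep in mind that the token is still visible to anyone who can read the rule definition, so a read-only token scoped to that single file is the safer choice.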

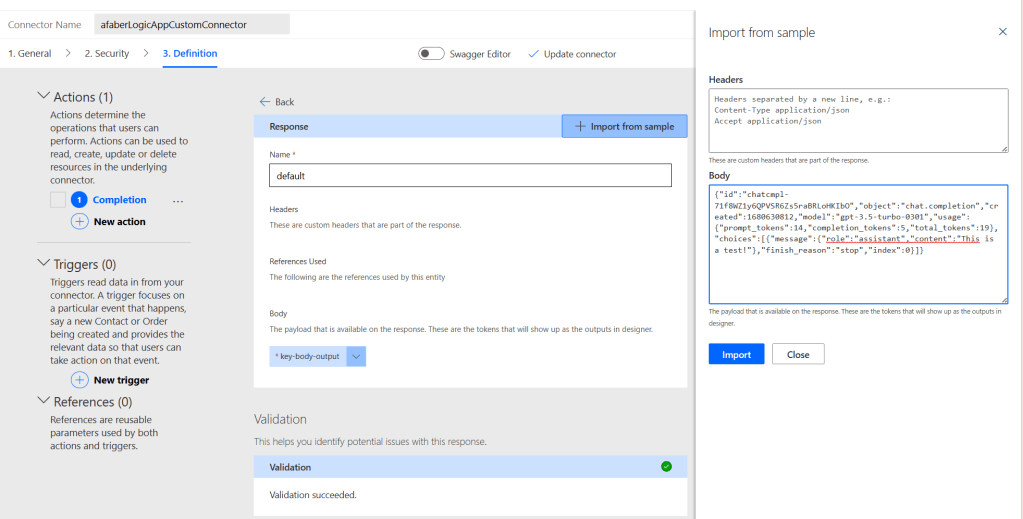

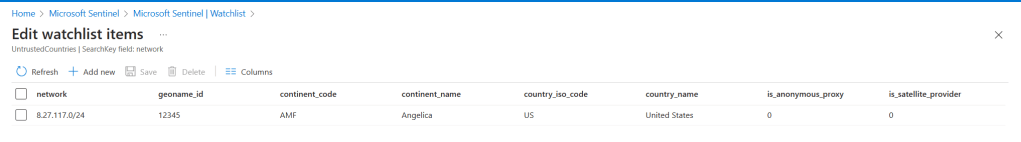

The local watchlist

This is where I would use a real Sentinel watchlist, which exists within each of the customers’ workspaces. This is not meant to be updated by the MSSP, so you just need to create it initially and then the customers can update it directly as needed. The MSSP should create this watchlist with the same specific name in every workspace, so that the same analytic rule can reference it everywhere. In my example I used UntrustedCountries, although looking at the contents now, I probably should have named it UntrustedIPRanges.

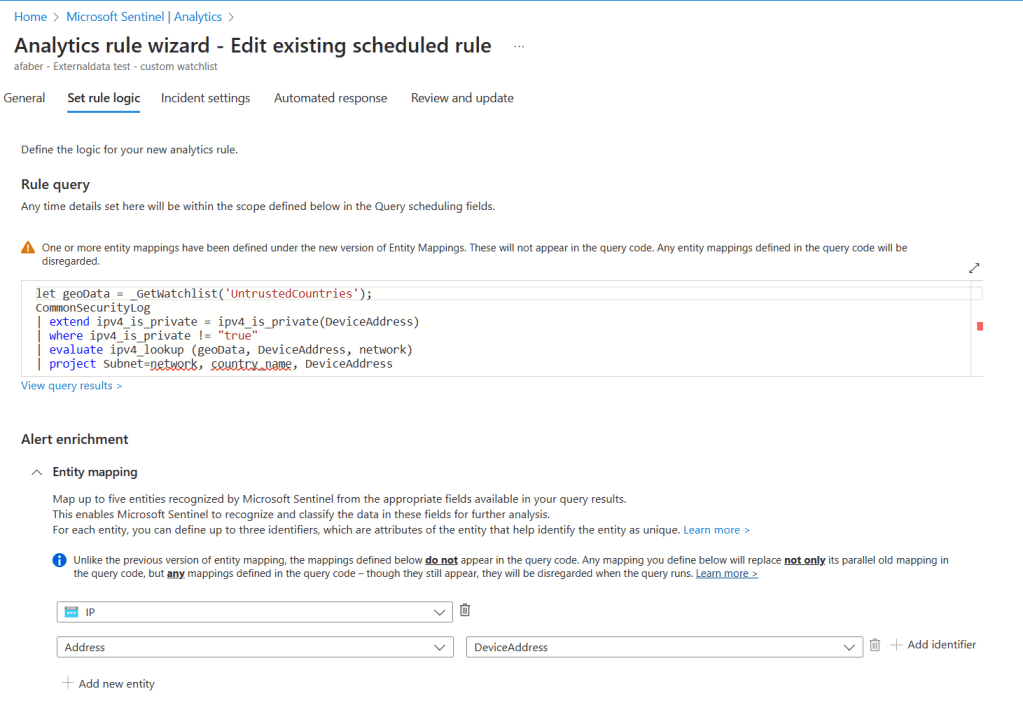

The analytic rule would look something like this:

Also, in the example above, I am generating alerts for a specific IP range, which happens to be located in the United States, because in my test scenario I don’t expect any connections from that location. This is obviously just an example.
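As a rough text sketch of a rule like this, the query below reads the local watchlist and matches sign-in IPs against the ranges it contains. The assumptions here are mine: that the watchlist alias is UntrustedCountries, that its SearchKey column holds IPv4 ranges in CIDR notation, and that SigninLogs is the log source.

```kusto
// Local lookup table: a regular Sentinel watchlist that the customer maintains.
// Assumes the SearchKey column contains IPv4 ranges such as 203.0.113.0/24.
let LocalUntrustedRanges = _GetWatchlist('UntrustedCountries')
    | project Network = tostring(SearchKey);
// Assumed log source: SigninLogs.
SigninLogs
| where TimeGenerated > ago(1h)
// Keep only the rows whose IPAddress falls inside one of the watchlist ranges.
| evaluate ipv4_lookup(LocalUntrustedRanges, IPAddress, Network)
| project TimeGenerated, UserPrincipalName, IPAddress, Location, Network
```

The `ipv4_lookup` plugin only handles IPv4, so a workspace that sees a lot of IPv6 sign-ins would need an additional check for those addresses.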

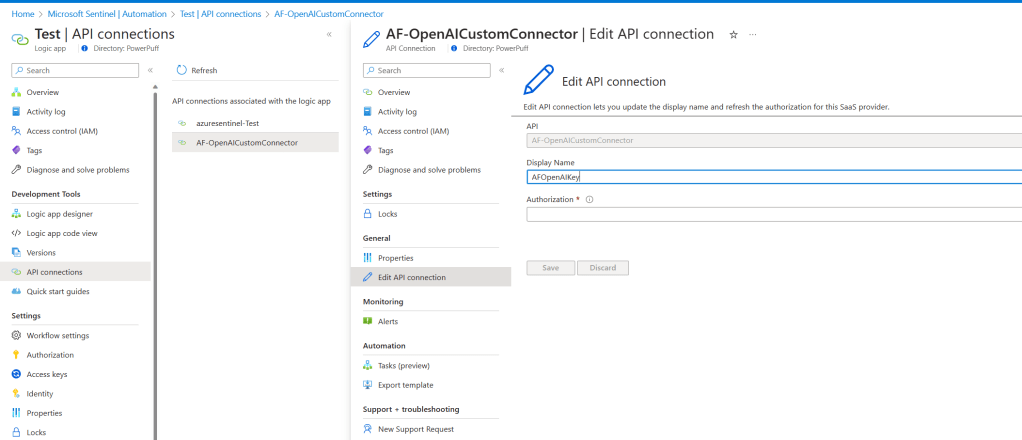

The combined analytic rule or correlation rule

I can just generate incidents based on those two separate rules, but sometimes it makes more sense to combine them. And technically, I have two options here:

1. I can just combine them into one analytic rule directly, as shown below.

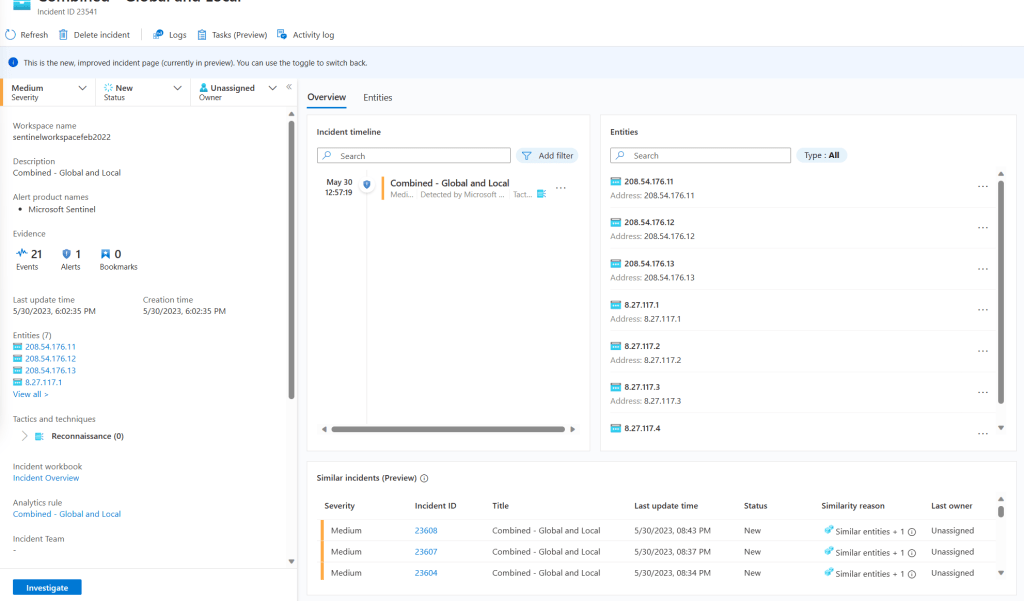

2. Or I can just set the other two analytic rules to not generate incidents and I can create an overarching correlation rule that generates incidents.

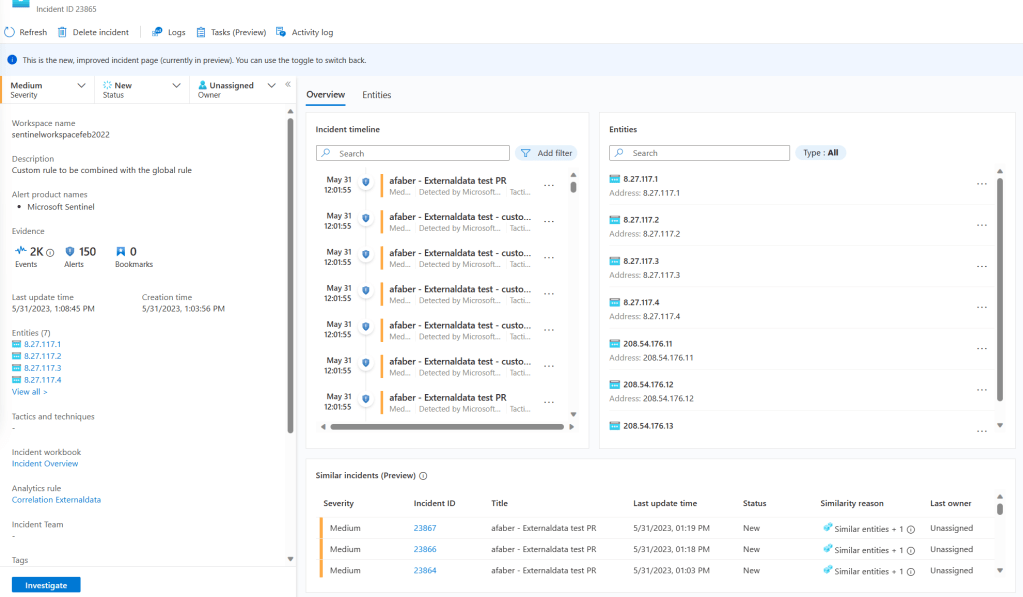

In the correlation example above, I configured entity mapping to preserve the entities from the original alerts. In the end, the incident created is very similar to the one from the combined rule, specifically in that it shows the original entities. In this case the entities are the IPs that are associated with those alerts.
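A correlation query along these lines could be sketched as follows. The two alert names are hypothetical and need to match the names of your own global and local rules. The sketch assumes the original rules mapped an IP entity, so that each alert's Entities column contains an entry of type `ip` with an `Address` field.

```kusto
// Correlate the alerts raised by the two underlying rules.
// The alert names below are hypothetical placeholders.
SecurityAlert
| where TimeGenerated > ago(1h)
| where AlertName in ("Global untrusted countries", "Local untrusted IP ranges")
// Entities is stored as a JSON string, so expand it into one row per entity.
| mv-expand Entity = todynamic(Entities)
| where tostring(Entity.Type) == "ip"
| extend IPAddress = tostring(Entity.Address)
// One row per IP, listing which of the two rules fired for it.
| summarize AlertNames = make_set(AlertName), AlertCount = count() by IPAddress
```

Mapping the resulting IPAddress column to an IP entity in the correlation rule is what carries the original IPs through to the incident.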

Also, it is good to remember that MSSPs can still modify the name of the alerts, and choose how to group them or not group them, as they would with any other analytic rule. That goes for either the combined rule or the correlation rule.
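To make option 1 more concrete, here is a hedged sketch of a single query that checks both lookup tables and tags each hit with its source. The same assumptions apply as for the individual rules: a placeholder blob URL and SAS token, a watchlist alias of UntrustedCountries holding IPv4 ranges in its SearchKey column, and SigninLogs as the log source. The MatchSource column is a name I introduced for illustration.

```kusto
// Global list: MSSP-controlled blob (placeholder URL and SAS token).
let GlobalUntrustedCountries = externaldata(Country: string)
[
    h@"https://<storage-account>.blob.core.windows.net/<container>/<file>.csv?<sas-token>"
]
with (format="csv", ignoreFirstRecord=true);
// Local list: customer-maintained watchlist, assumed to hold CIDR ranges.
let LocalUntrustedRanges = _GetWatchlist('UntrustedCountries')
    | project Network = tostring(SearchKey);
let GlobalHits = SigninLogs
    | where TimeGenerated > ago(1h)
    | where Location in (GlobalUntrustedCountries)
    | extend MatchSource = "Global";
let LocalHits = SigninLogs
    | where TimeGenerated > ago(1h)
    | evaluate ipv4_lookup(LocalUntrustedRanges, IPAddress, Network)
    | extend MatchSource = "Local";
// A sign-in that matches both lists appears once per source.
union GlobalHits, LocalHits
| project TimeGenerated, UserPrincipalName, IPAddress, Location, MatchSource
```

Keeping a column such as MatchSource in the output lets the analyst see at a glance whether a hit came from the MSSP list or from the customer's own list, even though both end up in the same incident.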

Final thoughts

Some partners are concerned with the number of analytic rules, since there are limits per workspace, so the combined one may be a better option for some MSSPs. However, I wanted to provide both options, in case there are other constraints to take into account.

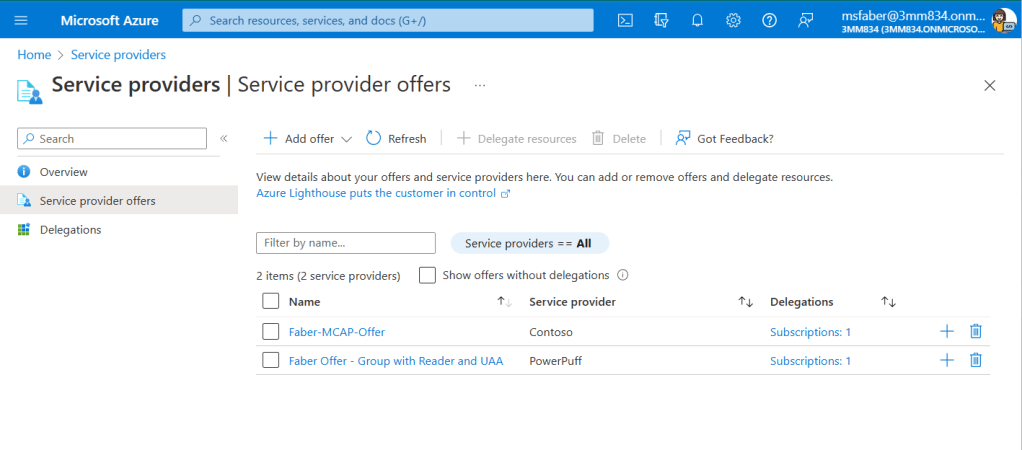

MSSPs can push these analytic rules to each of the customers’ workspaces and continuously keep them updated as needed using either Repositories or Workspace Manager. If you haven’t already tested these tools, I highly encourage you to do so. These tools are great to have in your MSSP toolbox!